Hey hey. Hope your 2026 is off to a great start. We just kicked off the AI PM Accelerator and it's been all-consuming in the best way.

I've been wanting to write this one for a while because I think AI product strategy is the most important skill a PM can build right now. And the challenge is that AI strategy is NOT the same as non-AI product strategy.

AI product strategy is what decides whether your AI feature actually works for users or just sits there looking impressive in a demo.

And it comes down to three questions:

Question 1: Where Do You Play?

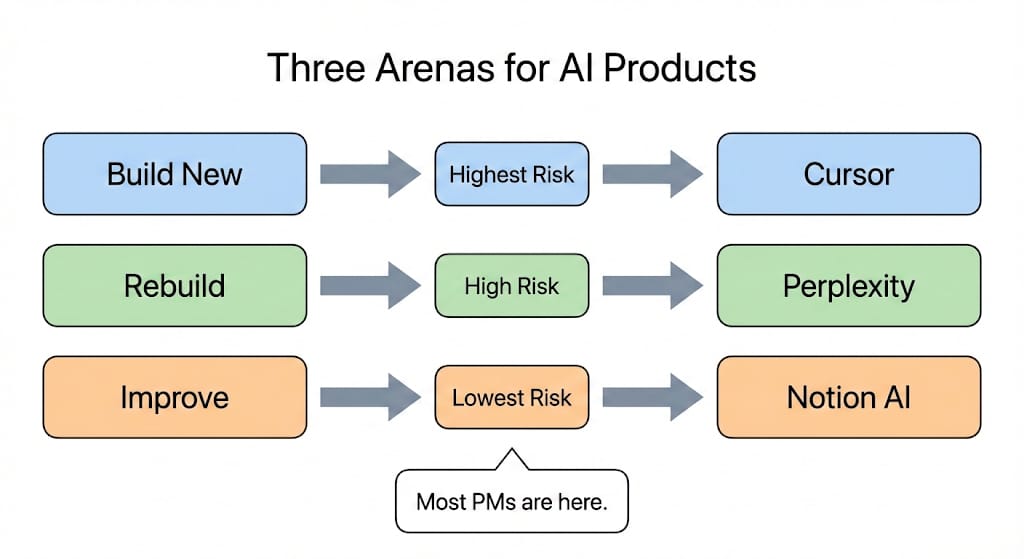

There are three different games you can play with AI products. Each one works differently. You need to know which one you're in.

| Arena | What It Means | Example | The Bet |
|---|---|---|---|
| Build New | You're creating something that didn't exist before AI made it possible | Cursor built an AI-native code editor. It went from $0 to $1B in annual revenue in about 24 months. | If you're right, you own the category. If you're wrong, you have nothing to fall back on. |
| Rebuild | You're taking something that already exists and making it 10x better with AI | Perplexity rebuilt search from scratch. No blue links. No ads. Just answers. Approaching $200M in revenue. | You're going up against companies with way more users and money. Your version needs to be obviously better. |
| Improve | You're adding AI to a product that already works | Notion AI. Same product, same users. But now it can write, summarize, and search better. | Lowest risk. Your product already works. AI just makes it better. |

Look at these three for a second. Cursor didn't add AI to VS Code. It built a whole new editor. Perplexity didn't put a chatbot on top of Google. It threw out everything Google does and started over. Notion just made its existing product smarter.

All three are working. But they're playing completely different games.

The mistake is playing the wrong one. If you're adding AI to an existing product but acting like you're building a new category, you'll spend too much money and confuse your users. If you're trying to create a new category but playing it safe, someone bolder will beat you.

Most PMs reading this are in the Improve lane. That's a good place to be. It's where the most consistent value gets created. But you need to know that's where you are, because the team you need, the money you'll spend, and how you'll measure success all depend on it.

Once you know which game you're playing, the second question gets a lot clearer.

Question 2: Is It Real or Cosmetic?

OK so you know your arena. Now: does the AI feature you're building actually change anything for the user?

| Cosmetic AI | Real AI |
|---|---|
| AI is added to the product but the experience barely changes | AI changes how users get their job done |
| The workflow is the same as before | Users can't go back to the old way |
| Remove the AI and nobody notices | Remove the AI and the product feels broken |
| An "AI insights" tab that nobody opens | Gmail's Smart Compose, which changes how you write emails |

Here's a simple test. Take the AI feature out of your product tomorrow. Is the product meaningfully worse? If users wouldn't even notice, you've built something cosmetic.

Think about Perplexity again. It didn't add AI to search. It made search into something completely different. You type a question, you get an answer with sources. No scrolling through ten blue links. That's real AI.

Now compare that to a product that adds a "summarize with AI" button to a dashboard nobody was using in the first place. Same technology. But one changes behavior and the other is a sticker.

💡 Pro Tip: If you need a tutorial to explain why your AI feature is useful, it's probably cosmetic. Real AI features make sense the first time someone uses them.

This question keeps you honest. It's easy to build something because AI can do it. The harder question is whether anyone actually needs it.

Question 3: Are You Ready?

This one is uncomfortable. Not every team is ready to build what they want to build. And the gap between where you are and where you think you are is where most AI projects go to die.

Think of it as three stages.

| Stage | What It Looks Like | What You Should Be Doing |
|---|---|---|
| Crawl | You're using off-the-shelf models like GPT or Claude. Basic prompts. Small experiments. | Run experiments. Learn what works and what doesn't. Don't build anything custom yet. |
| Walk | You're building RAG systems, fine-tuning models, putting governance in place. | Build real infrastructure. Figure out how to measure quality. Scale the things that are working. |
| Run | Custom models, multi-agent systems, AI across the whole company. | Optimize and scale. Build things your competitors can't easily copy. |

If your team is at Crawl and you try to jump straight to Run, you'll spend months building things you can't maintain. If you're at Walk and still acting like you're at Crawl, you'll miss your chance to grow what's already working.

Be honest about where you are. Build from there.

Here's why this question matters so much. It connects to the first two. If you're in the Improve arena and your team is at Crawl, your first version should be simple: one off-the-shelf model doing one job well. If you're building something new and your team is at Run, you can go after something bigger.

The three questions work together. Your arena sets the ambition. Real vs. cosmetic sets the bar. Maturity sets the scope.

And once you have answers to all three, something practical changes: the way you prioritize.

How AI Breaks Your Prioritization

You probably already have a way of deciding what to build. RICE scores, impact vs. effort, weighted scorecards.

They all assume something: that you can roughly estimate the cost to build.

With AI, you can't. Here's why.

You can't predict the timeline. You might spend three months experimenting and end up with nothing you can ship. That's not failure. It's how AI development works. But your sprint plan won't like it.

It's never done. A normal feature ships and you move on. An AI feature ships and then you watch it forever. Users change their behavior. The data shifts. Something that worked great last month starts giving bad answers this month.

Most costs surface later. This is why AI features blow past their budgets. The costs you see upfront (design, engineering, API calls, QA) are only about 20-40% of what you'll actually spend. The other 60-80% is underwater: data preparation, pipelines, guardrails, edge case handling. The true cost of an AI feature is usually 3-5x your initial estimate, which makes estimating costs upfront really tough.

The real costs of building AI are hard to estimate upfront
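The 3-5x rule of thumb falls out of the 20-40% figure: if the costs you can see upfront are only a fraction of the total, the true cost is roughly the upfront estimate divided by that fraction. Here's a quick back-of-the-envelope sketch of that arithmetic. The $100k upfront estimate is a made-up number for illustration.

```python
def true_cost(upfront_estimate: float, visible_share: float) -> float:
    """Scale an upfront estimate by the share of total cost it actually covers."""
    if not 0 < visible_share <= 1:
        raise ValueError("visible_share must be between 0 (exclusive) and 1")
    return upfront_estimate / visible_share


upfront = 100_000  # hypothetical upfront estimate: design, engineering, API calls, QA
for share in (0.40, 0.30, 0.20):
    total = true_cost(upfront, share)
    print(f"upfront is {share:.0%} of total -> true cost ~${total:,.0f} ({total / upfront:.1f}x)")
```

At 20-40% visible, the multiplier lands between 2.5x and 5x, which is in line with the rough 3-5x range.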

The last mile costs a fortune. Getting from 90% accuracy to 95% might take two weeks. Getting from 95% to 99% might take six months and 10x the budget. Sometimes that last bit of quality isn't worth the cost. That's a product call, not an engineering one.
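One way to see why the last mile is so expensive: count wrong answers instead of accuracy points. Going from 90% to 95% halves the error rate. Going from 95% to 99% has to eliminate four out of every five remaining errors. The 10,000 questions a month below is an assumed volume.

```python
def wrong_answers(accuracy: float, questions: int) -> int:
    """Expected number of wrong answers at a given accuracy."""
    return round((1 - accuracy) * questions)


QUESTIONS_PER_MONTH = 10_000  # hypothetical volume

for before, after in [(0.90, 0.95), (0.95, 0.99)]:
    e_before = wrong_answers(before, QUESTIONS_PER_MONTH)
    e_after = wrong_answers(after, QUESTIONS_PER_MONTH)
    print(f"{before:.0%} -> {after:.0%}: {e_before} -> {e_after} wrong answers "
          f"(error rate cut {e_before / e_after:.0f}x)")
```

Whether removing those extra 400 wrong answers a month is worth six months and 10x the budget is exactly the product call described above.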

Adjusting Your ROI

When you estimate the return on an AI feature, add roughly 70% on top of your initial build estimate to cover ongoing AI costs (monitoring, retraining, data maintenance). If your team is at Crawl, the overhead might be even higher because everything is new. If you're at Run, it's lower because you've already built shared tools.
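Here's a minimal sketch of that adjustment, reading the ~70% as an overhead added to the build estimate. Assigning 70% to Walk is an assumption, and the Crawl (90%) and Run (50%) values are placeholders that only show the direction of the adjustment, not recommended numbers. The dollar figures are made up.

```python
# Overhead added on top of the build estimate to cover ongoing AI costs
# (monitoring, retraining, data maintenance). "walk" uses the ~70% rule of thumb;
# the "crawl" and "run" values are hypothetical placeholders.
ONGOING_OVERHEAD = {"crawl": 0.90, "walk": 0.70, "run": 0.50}


def adjusted_cost(build_estimate: float, maturity: str) -> float:
    return build_estimate * (1 + ONGOING_OVERHEAD[maturity])


def roi(expected_value: float, build_estimate: float, maturity: str) -> float:
    """Simple ROI, (value - cost) / cost, using the overhead-adjusted cost."""
    cost = adjusted_cost(build_estimate, maturity)
    return (expected_value - cost) / cost


build, value = 200_000, 300_000  # hypothetical build estimate and expected value
print(f"naive ROI (build only): {(value - build) / build:.0%}")
for stage in ("crawl", "walk", "run"):
    print(f"{stage:>5}: cost ${adjusted_cost(build, stage):,.0f}, "
          f"ROI {roi(value, build, stage):.0%}")
```

With these made-up numbers, a feature that looks like a 50% return on the build estimate alone comes out at break-even or worse once ongoing costs are included.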

The specific number is less important than the habit. AI features cost more to maintain than normal features. If you forget that, you'll keep greenlighting AI work that your team can't sustain.

Let's put this all together.

Pressure Test: The Internal AI Assistant

Here's a real scenario. Every concept from this post shows up in it.

The setup: You're a PM at a 500-person company. People waste hours every week digging through Notion, Google Drive, and Slack for answers. Leadership wants an AI assistant that employees can ask questions. You have 2 engineers and 3 months.

Before you scope a single thing, run the three questions.

Where do you play? Improve. You're making an existing workflow (finding internal info) better with AI.

Real or cosmetic? It could be real, but only if employees actually change their behavior and start asking the assistant instead of searching manually. That's your bar.

Are you ready? Two engineers, three months. That's Crawl. You're using off-the-shelf tools. Tight scope.

Now the product decisions come naturally.

What's v1? One data source. Pick whichever has the cleanest, most current content. Connecting three tools means three times the unknowns and three times the edge cases. You're at Crawl. Keep it small. Learn first.

What if the AI doesn't know the answer? It says "I don't know" and links to the original document. Don't let it guess. Let users ask a human instead. Trying to answer everything leads to made-up answers. Honest is better than wrong.

What about outdated or conflicting docs? Show the source and when it was last updated. Let the user decide. Add a button: "Was this helpful? Flag as outdated." Now you have a feedback loop.
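The v1 behavior described here (answer only when there's a good match, always show the source and when it was last updated, otherwise say "I don't know" and link the document) fits in a few lines. This is a toy sketch: it scores documents by simple word overlap instead of embeddings or an LLM, and the `Doc` fields, the 0.3 threshold, the `flag_outdated` helper, and the sample document are all hypothetical.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass
class Doc:
    title: str
    url: str
    text: str
    last_updated: date


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def overlap_score(question: str, doc: Doc) -> float:
    """Toy relevance: share of question words that also appear in the doc."""
    q = tokens(question)
    return len(q & tokens(doc.text)) / len(q) if q else 0.0


def answer(question: str, docs: list[Doc], threshold: float = 0.3) -> dict:
    scored = [(overlap_score(question, d), d) for d in docs]
    if not scored:
        return {"answer": "I don't know.", "source": None, "escalate": True}
    score, best = max(scored, key=lambda pair: pair[0])
    if score < threshold:
        # Don't guess: say so, link the closest doc, and offer a human.
        return {"answer": "I don't know.", "source": best.url, "escalate": True}
    return {
        "answer": best.text,  # a real system would have an LLM answer from this passage
        "source": best.url,
        "last_updated": best.last_updated.isoformat(),  # let the user judge freshness
        "escalate": False,
    }


outdated_flags: dict[str, int] = {}


def flag_outdated(url: str) -> None:
    """Backs a 'Flag as outdated' button: count flags per source doc."""
    outdated_flags[url] = outdated_flags.get(url, 0) + 1


# Hypothetical single data source (v1 = one source).
docs = [Doc("PTO policy", "https://wiki.example.com/pto",
            "Employees get 20 days of paid time off per year.", date(2025, 11, 3))]
print(answer("How many days of paid time off do I get?", docs))
print(answer("Who approves travel expenses?", docs))
```

The threshold is the knob that trades coverage for honesty: raise it and the assistant says "I don't know" more often but makes fewer things up.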

What do you measure after launch? Questions the AI couldn't answer (these are gaps in your docs). Thumbs-down ratings. Which sources get used most. Whether accuracy holds up or drops over time.
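Those four signals can come straight out of a simple interaction log. A minimal sketch, assuming each logged question records the week, whether the assistant answered, the source it answered from, and an optional thumbs rating; the `Interaction` fields and the sample log are hypothetical. "Accuracy over time" is approximated by the weekly thumbs-up share, which is a proxy, not true accuracy.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    week: int
    question: str
    answered: bool
    source: Optional[str]  # URL of the doc used to answer, if any
    rating: Optional[int]  # +1 thumbs up, -1 thumbs down, None if not rated


def launch_metrics(log: list[Interaction]) -> dict:
    unanswered = [i.question for i in log if not i.answered]  # gaps in your docs
    rated = [i for i in log if i.rating is not None]
    thumbs_down_rate = sum(i.rating == -1 for i in rated) / len(rated) if rated else 0.0
    source_usage = Counter(i.source for i in log if i.answered and i.source)

    # Weekly thumbs-up share as a rough proxy for whether quality holds or drifts.
    weekly = defaultdict(list)
    for i in rated:
        weekly[i.week].append(i.rating == 1)
    weekly_up_share = {w: sum(v) / len(v) for w, v in sorted(weekly.items())}

    return {
        "unanswered_questions": unanswered,
        "thumbs_down_rate": thumbs_down_rate,
        "source_usage": source_usage.most_common(),
        "weekly_up_share": weekly_up_share,
    }


log = [
    Interaction(1, "How much PTO do I get?", True, "https://wiki.example.com/pto", 1),
    Interaction(1, "Who approves travel?", False, None, None),
    Interaction(2, "How much PTO do I get?", True, "https://wiki.example.com/pto", -1),
    Interaction(2, "Where is the brand kit?", True, "https://wiki.example.com/brand", 1),
]
print(launch_metrics(log))
```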

See the pattern? One source because you're at Crawl. A clear definition of "good enough" because you know the difference between real and cosmetic. Ongoing monitoring because AI features are never done.

That's what it looks like when strategy turns into product decisions.

Key Takeaways

Strategy is the skill. The decisions you make before you pick a model matter more than the model itself.

Know your arena. Build New, Rebuild, and Improve are different games. Most teams are in Improve. That's fine. Own it.

Test for cosmetic AI. If you removed the AI and users didn't care, it's cosmetic. Start over.

Be honest about readiness. Crawl, Walk, Run. Match your plan to what your team can actually do today.

AI features cost more forever. Add data readiness, infrastructure, and monitoring to every estimate. If you don't, you'll promise things your team can't deliver.

The best AI PMs I work with don't have better technical skills than everyone else. They just have clearer answers to these three questions.

That was it for this time.

See you next week

—Sid